Will the 4B support RKLLM?

-

Is there a plan to update the firmware to support RKLLM? If so, roughly when can we expect it?

-

Description

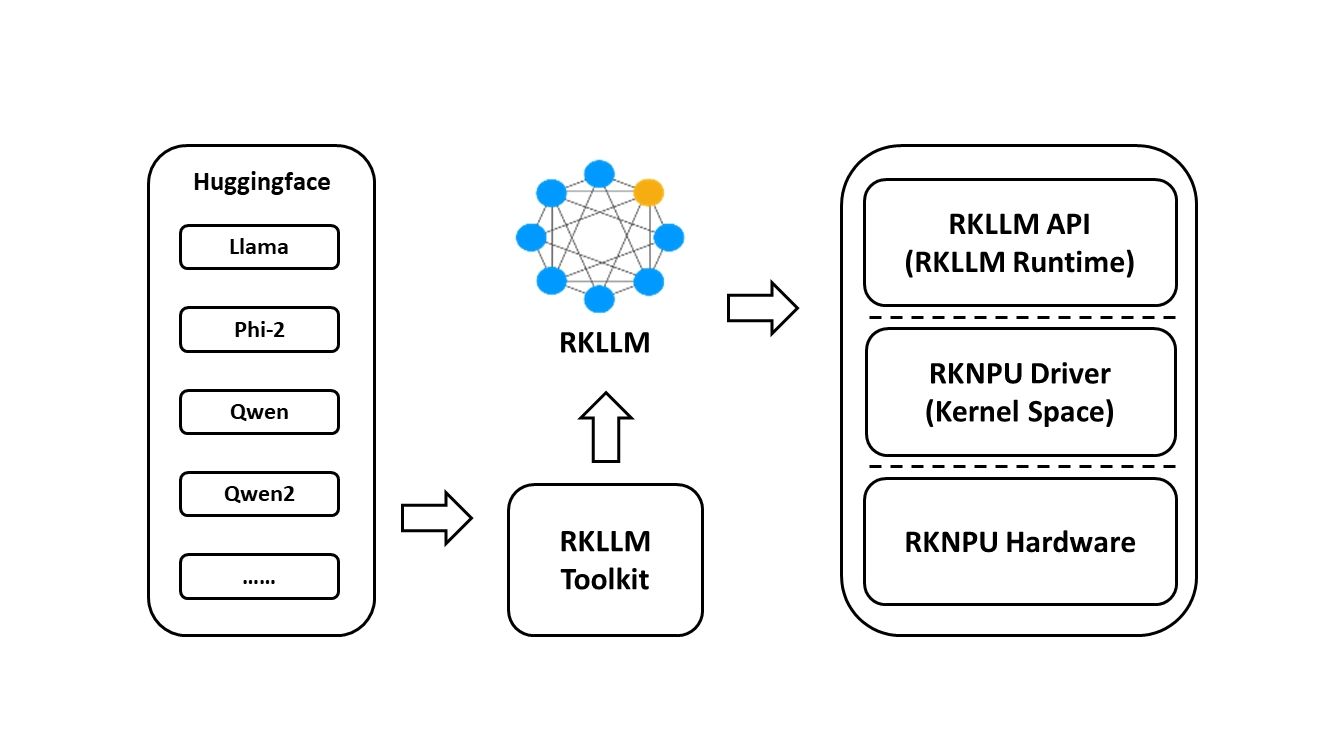

RKLLM software stack can help users to quickly deploy AI models to Rockchip chips. The overall framework is as follows:

To use the RKNPU, users first run the RKLLM-Toolkit tool on a computer to convert the trained model into an RKLLM format model, and then run inference on the development board using the RKLLM C API.

- RKLLM-Toolkit is a software development kit for users to perform model conversion and quantization on PC.

- RKLLM Runtime provides C/C++ programming interfaces for the Rockchip NPU platform to help users deploy RKLLM models and accelerate the implementation of LLM applications.

- RKNPU kernel driver is responsible for interacting with the NPU hardware. It is open source and can be found in the Rockchip kernel code.

Support Platform

- RK3588 Series

- RK3576 Series

Download

- You can also download all packages, docker image, examples, docs and platform-tools from RKLLM_SDK, fetch code: rkllm

RKNN Toolkit2

If you want to deploy other AI models, we have introduced a new SDK called RKNN-Toolkit2. For details, please refer to:

https://github.com/airockchip/rknn-toolkit2

Notes

Due to recent updates to the Phi2 model, the current version of the RKLLM SDK does not yet support these changes.

Please make sure to download a supported version of the Phi2 model.

CHANGELOG

v1.0.0-beta

- Supports the conversion and deployment of LLM models on RK3588/RK3576 platforms

- Compatible with Hugging Face model architectures

- Currently supports the models LLaMA, Qwen, Qwen2, and Phi-2

- Supports quantization with w8a8 and w4a16 precision
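For readers unfamiliar with the naming: w8a8 is generally read as 8-bit weights with 8-bit activations, and w4a16 as 4-bit weights with 16-bit activations. The sketch below only illustrates what the bit width means, using plain symmetric per-tensor quantization. It is an assumption for illustration, not the algorithm RKLLM-Toolkit actually uses, and the function names are hypothetical.

```python
def quantize_symmetric(values, bits):
    """Map floats to signed integers of the given bit width using one shared scale."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8 bits, 7 for 4 bits
    peak = max(abs(v) for v in values)
    scale = peak / qmax if peak else 1.0
    # Round to the nearest integer step and clamp to the representable range.
    quantized = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the integers and the shared scale."""
    return [q * scale for q in quantized]


# Illustrative weights only; real models have millions of values per tensor.
weights = [0.5, -1.0, 0.25, 0.0]
q8, s8 = quantize_symmetric(weights, 8)
q4, s4 = quantize_symmetric(weights, 4)

print("8-bit:", q8, dequantize(q8, s8))
print("4-bit:", q4, dequantize(q4, s4))
```

With fewer bits the integer grid is coarser, so the recovered values drift further from the originals. That is the trade-off between model size and accuracy that the two precision modes represent.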

-

-

@airobot机器人开发

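It is still a beta version; we will consider porting it once a later release version is out. If you are interested, you can try porting it yourself. The most important step is the model conversion on an x86 machine. For more detailed material, you can request it by email: george@cool-pi.com

-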

@george Thanks.

-

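I tried Qwen 1.8B myself and it worked fine, but when I switched to 4B it reports that the NPU driver needs to be upgraded.

Then I saw that the official repository has released the NPU driver, but I don't know how to compile and install it directly on the board.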
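Before rebuilding anything, it may help to confirm which NPU driver version the board is actually running. The following is a minimal sketch, assuming the kernel exposes the version at `/sys/kernel/debug/rknpu/version` (common on Rockchip kernels, but it needs root and a mounted debugfs) and that the string looks like `RKNPU driver: v0.9.6`. The minimum version used here is a placeholder, not a value taken from this thread; take the real requirement from the SDK documentation.

```python
import re
from pathlib import Path

# Assumed location of the driver version string; adjust if your kernel differs.
VERSION_FILE = Path("/sys/kernel/debug/rknpu/version")


def parse_version(text):
    """Extract (major, minor, patch) from a string such as 'RKNPU driver: v0.9.6'."""
    match = re.search(r"v?(\d+)\.(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError(f"no version found in {text!r}")
    return tuple(int(part) for part in match.groups())


def driver_is_new_enough(text, minimum):
    """Return True when the parsed driver version is at least `minimum`."""
    return parse_version(text) >= minimum


if __name__ == "__main__":
    minimum = (0, 9, 6)  # placeholder; check the SDK docs for the real requirement
    try:
        raw = VERSION_FILE.read_text()
    except OSError as exc:
        print(f"could not read {VERSION_FILE}: {exc}")
    else:
        status = "ok" if driver_is_new_enough(raw, minimum) else "too old"
        print(raw.strip(), "->", status)
```

Run it on the board with root privileges. If the file is missing, the kernel may have been built without the RKNPU driver or without debugfs.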

-

@george Someone has uploaded this on Hugging Face; I downloaded it and it works fine.

-

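@shengxia I tried it and it did not work. Which firmware version are you using? Loading succeeds, but at run time the output is garbage: sometimes garbled characters, sometimes just random text.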

-

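It turns out the model I converted myself was the problem. The tool reported a successful conversion, but at run time the output was all garbled. I wonder whether this is related to the parameter settings used during conversion.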

-

@airobot机器人开发

Do you have the complete SDK? I can email you a copy; it includes fairly detailed documentation.

-

@george Yes, I do. Thanks.

-

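Could you send me a copy of the material as well? The model provided at the download link also produces garbled output. I also tried converting it myself, but installing the rknn-llm whl fails too, with an error saying a file cannot be decoded as UTF-8.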